Below is a list of the Borland documentation that BitSavers added in 2022, ordered by relevance to me (and how I finally asked Peter Sawatzki if he still had the monochrome TDVIDEO.DLL he wrote for Turbo Debugger 3.0 for Windows):

Archive for the ‘x86’ Category

BitSavers added some more Borland documentation in 2022 (most relevant for me: Assembler, Debugger, Profiler)

Posted by jpluimers on 2024/02/28

Posted in 8087, Algorithms, Assembly Language, Borland C++, C, C++, Debugging, Development, Floating point handling, Profiling, Software Development, Turbo Assembler, Turbo C, Turbo Debugger, Turbo Profiler, x86 | Leave a Comment »

The fundamentals of programming, a thread by @isotopp on Twitter

Posted by jpluimers on 2023/03/22

Kristian Köhntopp publishes great DevOps related threads on Twitter. [Wayback/Archive] Thread by @isotopp “I am Kris, and I am 53 now. I learned programming on a Commodore 64 in 1983. My first real programming language (because C64 isn’t one) was 6502 assembler, forwards and backwards.” is his response, about a year and a half ago, to a request by Julia Evans (@b0rk) that I also saved: [Wayback/Archive] Thread by @b0rk on Thread Reader App – Thread Reader App.

Her request: [Archive] 🔎Julia Evans🔍 on Twitter: “if you’ve been working in computing for > 15 years — are there fundamentals that you learned “on the job” 15 years ago that you think most people aren’t learning on the job today? (I’m thinking about how for example nobody has ever paid me to write C code)” / Twitter followed by [Archive] 🔎Julia Evans🔍 on Twitter: “I’m especially interested in topics that are still relevant today (like C programming) but are just harder to pick up at work now than they used to be” / Twitter.

The start of his thread is [Archive] Kris on Twitter: “@b0rk I am Kris, and I am 53 now. I learned programming on a Commodore 64 in 1983. My first real programming language (because C64 isn’t one) was 6502 assembler, forwards and backwards.” / Twitter.

Kristian’s story is very similar to mine, though I stepped up the structured programming language ladder sooner: at high school I had access to an Apple //e with a Z80 card (yes, the SoftCard), so I could run CP/M with Turbo Pascal 1.0 (later 2.0 and 3.0), which I partly described in The calculators that got me into programming (via: calculators : Algorithms for the masses – julian m bucknall). That was followed by early access, at the nearby university, to PCs running on the 8086 and up. The computer science lab (now called Snellius, but back then known as CRI, for Centraal RekenInstituut) had an educational deal with IBM, which meant they switched from the PC/XT to the PC/AT with an 80286 processor as soon as the latter came out.

Posted in 6502 Assembly, Assembly Language, Development, ESP32, ESP8266, Software Development, x86 | Leave a Comment »

Very useful link: Software optimization resources. C++ and assembly. Windows, Linux, BSD, Mac OS X

Posted by jpluimers on 2023/02/14

If I ever need to go deep into optimisation again, there is lots I can still learn from [Wayback/Archive] Software optimization resources. C++ and assembly. Windows, Linux, BSD, Mac OS X

Thanks [Archive] Kris on Twitter: “@Kharkerlake @unixtippse Agner Fog is actually an anthropologist, but he reverse-engineers internal structures of Intel CPUs, and …, especially 3. The microarchitecture of Intel, AMD and VIA CPUs: An optimization guide for assembly programmers and compiler makers is the HPC bible.” / Twitter!

Must watch video with Agner about Warlike and Peaceful Societies below the signature.

–jeroen

Posted in Assembly Language, C++, Development, Software Development, x64, x86 | Leave a Comment »

When floating point code suddenly becomes orders of magnitude slower (via C++ – Why does changing 0.1f to 0 slow down performance by 10x? – Stack Overflow)

Posted by jpluimers on 2022/01/26

When working with converging algorithms, sometimes floating-point code can become very slow. That is: orders of magnitude slower than you would expect.

A very interesting answer to [Wayback] c++ – Why does changing 0.1f to 0 slow down performance by 10x? – Stack Overflow.

I’ve only quoted a few bits; read the full question and answer for more background information.

Welcome to the world of denormalized floating-point! They can wreak havoc on performance!!!

Denormal (or subnormal) numbers are kind of a hack to get some extra values very close to zero out of the floating point representation. Operations on denormalized floating-point can be tens to hundreds of times slower than on normalized floating-point. This is because many processors can’t handle them directly and must trap and resolve them using microcode.

If you print out the numbers after 10,000 iterations, you will see that they have converged to different values depending on whether `0` or `0.1` is used.

Basically, the convergence uses some values closer to zero than a normal floating point representation can store, so a trick is used called “denormal numbers or denormalized numbers (now often called subnormal numbers)” as described in Denormal number – Wikipedia:

…

In a normal floating-point value, there are no leading zeros in the significand; rather, leading zeros are removed by adjusting the exponent (for example, the number 0.0123 would be written as 1.23 × 10⁻²). Denormal numbers are numbers where this representation would result in an exponent that is below the smallest representable exponent (the exponent usually having a limited range). Such numbers are represented using leading zeros in the significand.

…

Since a denormal number is a boundary case, many processors do not optimise for this.
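To make the boundary concrete, here is a minimal Python sketch (not taken from the Stack Overflow answer) that inspects the bit pattern of a 64-bit double. The helper name `is_subnormal` is made up for this illustration. It only shows where subnormal values start; it does not measure the slowdown, which depends on the processor.

```python
import struct
import sys


def is_subnormal(x: float) -> bool:
    """True when x is a nonzero double whose exponent field is all zeros.

    Hypothetical helper for illustration only.
    """
    bits = struct.unpack("<Q", struct.pack("<d", x))[0]
    exponent = (bits >> 52) & 0x7FF        # 11 exponent bits
    fraction = bits & ((1 << 52) - 1)      # 52 explicit significand bits
    return exponent == 0 and fraction != 0


smallest_normal = sys.float_info.min       # 2**-1022, the smallest normal double
below_normal = smallest_normal / 2         # only representable with a leading zero
smallest_subnormal = smallest_normal / 2**52  # 2**-1074, last value before zero

for value in (1.0, smallest_normal, below_normal, smallest_subnormal, 0.0):
    print(f"{value!r:>25}  subnormal: {is_subnormal(value)}")
```

A converging algorithm that keeps shrinking its values will eventually cross from the second row of that output into the third, which is where the slow path described above can kick in.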

–jeroen

Posted in .NET, Algorithms, ARM, Assembly Language, C, C#, C++, Delphi, Development, Software Development, x64, x86 | Leave a Comment »

Some notes on losing performance because of using AVX

Posted by jpluimers on 2019/03/20

It looks like AVX can be a curse most of the time. Below are some (many) links that led me to this conclusion, based on a thread started by Kelly Sommers.

My conclusion

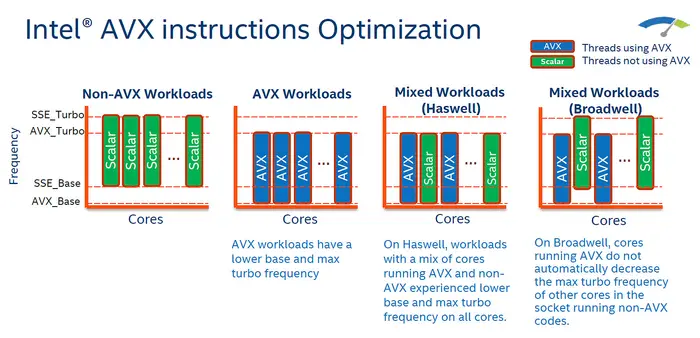

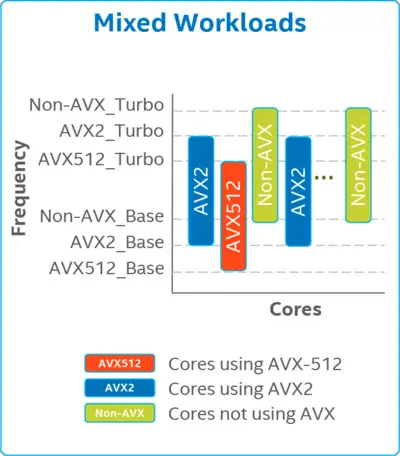

Running AVX instructions will affect the processor frequency, which means that non-AVX code will slow down, so you will only benefit when the gain of using AVX code outweighs the non-AVX loss on anything running on that processor in the same time frame.

In practice, this means you need a long-term gain from AVX on many cores. If you don’t have that, performance on all cores, including the initial AVX performance, will degrade, often by a lot (tens of percent).
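The trade-off can be sketched with a back-of-the-envelope model. This is my own simplification, not something from the linked threads: it assumes the whole workload runs at the lowered AVX clock, that the AVX part gets a fixed per-cycle speedup, and it ignores the time it takes the clock to recover. The function names and the example numbers (a 2x AVX speedup, a clock ratio of 0.85 matching the roughly 15% drop quoted further down) are assumptions for illustration.

```python
def net_speedup(avx_fraction: float, avx_speedup: float, clock_ratio: float) -> float:
    """Overall speedup versus running everything without AVX at the full clock.

    avx_fraction: share of the original run time that can use AVX (0..1)
    avx_speedup:  assumed per-cycle speedup of that share
    clock_ratio:  AVX clock divided by normal clock, e.g. 0.85 for a 15% drop
    """
    if not 0.0 <= avx_fraction <= 1.0:
        raise ValueError("avx_fraction must be between 0 and 1")
    if avx_speedup <= 0.0 or not 0.0 < clock_ratio <= 1.0:
        raise ValueError("avx_speedup must be > 0 and clock_ratio in (0, 1]")
    # Run time in "full clock" units after vectorising part of the work.
    time_at_full_clock = (1.0 - avx_fraction) + avx_fraction / avx_speedup
    # Pessimistic assumption: everything runs at the reduced clock.
    return clock_ratio / time_at_full_clock


def break_even_fraction(avx_speedup: float, clock_ratio: float) -> float:
    """Smallest avx_fraction for which net_speedup reaches 1.0."""
    if avx_speedup <= 1.0:
        raise ValueError("without a per-cycle gain there is no break-even point")
    # Solve clock_ratio = (1 - f) + f / avx_speedup for f.
    return (1.0 - clock_ratio) / (1.0 - 1.0 / avx_speedup)


for fraction in (0.05, 0.30, 0.90):
    print(fraction, round(net_speedup(fraction, 2.0, 0.85), 3))
print("break-even fraction:", round(break_even_fraction(2.0, 0.85), 3))
```

Under these assumptions, anything that spends only a small share of its time in AVX code ends up slower overall, which matches the gist of the tweets below.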

Tweets and pages linked by them

- [WayBack] Kelly Sommers on Twitter: “So here’s a real question. What does Amazon and Microsoft and other kubernetes cloud services do to prevent your containers from losing 11ghz of performance because someone deployed some AVX optimized algorithm on the same host?”

- [WayBack] Jeroen Pluimers on Twitter: “Where do I learn more on side effects of AVX?… “

- [WayBack] Kelly Sommers on Twitter: “Not the greatest link but the quickest one I found lol https://t.co/NUvEl1CEp5… “

- [WayBack] E-class CPUs down clock when AVX is in the execution stack? Is this true, if so why would it?

- The Core i7 processors that are referred to as “Haswell-E” and “Broadwell-E” are minor variants of the Xeon E5 v3 “Haswell-EP” and Xeon E5 v4 “Broadwell-EP” processors. These have lower “maximum Turbo” frequencies for each core count when 256-bit registers are being used.

- Certain AVX workloads may run at lower peak turbo frequencies, or drop below the Non-AVX Base Frequency of the SKU. This type of behavior is due to power, thermal, and electrical constraints.

- [WayBack] PDF: Optimizing Performance with Intel® Advanced Vector Extensions

- [WayBack] 🤓science_dot on Twitter: “https://t.co/YAZWbuo9Mn contains the frequency tables for Skylake Xeon for non-AVX, AVX2 and AVX-512. There are nuances that don’t fit into a tweet… (#ImIntel)… https://t.co/vSRFSd9GAb”

- [WayBack] Phil Dennis-Jordan on Twitter: “Basically, PC games don’t use AVX for this exact reason. AVX is great if >90% of your CPU time is spent in AVX code across all cores, for long-lasting workloads. Which pretty much means it gets used for HPC and maybe CPU-intensive content creation and that’s about it.… https://t.co/HMfmhPeSHH”

- [WayBack] Phil Dennis-Jordan on Twitter: “As soon as you use an AVX instruction on recent Intel CPUs, you trip its protective AVX clock circuitry. This means that for the next N milliseconds, it’s limited to whatever the rated maximum AVX clock rate is.… https://t.co/2poSaQDmXF”

- [WayBack] Phil Dennis-Jordan on Twitter: “It doesn’t matter if you’re pegging the core for long running calculations or just briefly switched to AVX because it has a convenient instruction. For this defined amount of time, anything running will be subject to the AVX clock limit.… https://t.co/ZxgXAzbu3R”

- [WayBack] svacko on Twitter: “also check wikichip that has perfect resources on this AVX/AVX2/AVX512 downscaling https://t.co/1nn3Wn1dO1 i was also pretty shocked when i found this as we are massively using AVX2 in our shop.. i think there are no GHz guarantees, the clouders guarantees you only vCPUs…… https://t.co/UwDTsmWT3W”

- [WayBack] Frequency Behavior – Intel – WikiChip: The Frequency Behavior of Intel’s CPUs is complex and is governed by multiple mechanisms that perform dynamic frequency scaling based on the available headroom.

- [WayBack] Ben Adams on Twitter: “Cloudflare did write up about AVX2: On the dangers of Intel’s frequency scaling https://t.co/JYbhjTwiD0… “

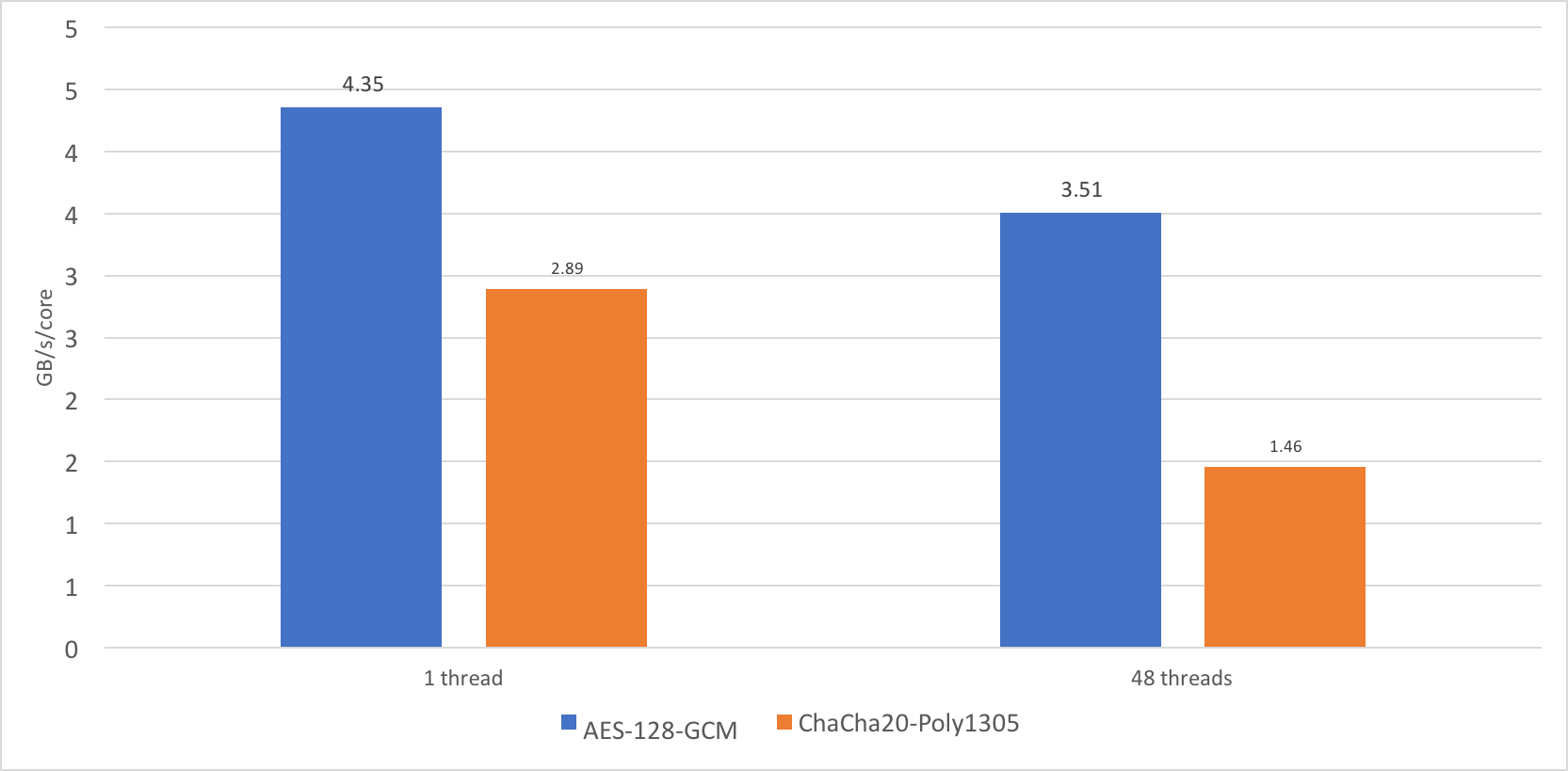

- [WayBack] On the dangers of Intel’s frequency scaling: While I was writing the post comparing the new Qualcomm server chip, Centriq, to our current stock of Intel Skylake-based Xeons, I noticed a disturbing phenomena. When benchmarking OpenSSL 1.1.1dev, I discovered that the performance of the cipher ChaCha20-Poly1305 does not scale very well.

- [WayBack] Vlad Krasnov on Twitter: “https://t.co/gtcQHjJFLQ When compiling with -mavx512dq it runs 25% on a Gold with 40 threads On Silver with 24 threads: ~30% slower… https://t.co/UmwyYY0ISR”

- [WayBack] Ryan Zezeski on Twitter: “I haven’t done much reading (or any testing) about this myself, but I did find this summary interesting: https://t.co/Yjsh8kB789… https://t.co/MZqBXlkiHz”

- [WayBack] avx_sigh.md · GitHub

why doesn’t radfft support AVX on PC?

- The short version is that unless you’re planning to run AVX-intensive code on that core for at least the next 10ms or so, you are completely shooting yourself in the foot by using AVX float. A complex RADFFT at N=2048 (relevant size for Bink Audio, Miles sometimes uses larger FFTs) takes about 19k cycles when computed 128b-wide without FMAs. That means that the actual FFT runs and completes long before we ever get the higher power license grant, and then when we do get the higher power license, all we’ve done is docked the core frequency by about 15% (25%+ when using AVX-512) for the next couple milliseconds, when somebody else’s code runs. That’s a Really Bad Thing for middleware to be doing, so we don’t.

- These older CPUs are somewhat faster to grant the higher power license level (but still on the order of 150k cycles), but if there is even one core using AVX code, all cores (well, everything on the same package if you’re in a multi-socket CPU system) get limited to the max AVX frequency. And they don’t seem to have the “light” vs. “heavy” distinction either. Use anything 256b wide, even a single instruction, and you’re docking the max turbo for all cores for the next couple milliseconds. That’s an even worse thing for middleware to be doing, so again, we try not to.

- [WayBack] Ben Higgins on Twitter: “I’d have to hunt down the specifics but we saw a perf regression we attributed to AVX512 additions added to glibc. Disabling the AVX512 version improved perf for our app.… https://t.co/IZIzuBO2F8”

- [WayBack] Bartek Ogryczak on Twitter: “TIL, thanks for mentioning this. Intel is saying “workaround: none, fix: none” 😱 https://t.co/6BVJqV8rff… https://t.co/STEbs6M2tK”

- [WayBack] Ben Higgins on Twitter: “My colleagues tracked it down to an avx512-specific variant of memmove in glibc, despite not calling it very often we saw a big speedup on skylake when we stopped using the avx512 version.… https://t.co/vz2u8dCKbX”

- [WayBack] Trent Lloyd 🦆on Twitter: “Though not quite the same issue, this bug has some really interesting insight into reasons you can kill performance using various variants of SSE/AVX… https://t.co/pNghRVJZO0 – was fascinating to me.… https://t.co/b3jb9WFWne”

- [WayBack] Bug #1663280 “Serious performance degradation of math functions” : Bugs : glibc package : Ubuntu

- [WayBack] Trent Lloyd 🦆 on Twitter: “Super short version was that mixing SSE (128-bit) and AVX-256 which uses the same registers but “extended” to 256bits on some CPUs requires the CPU to save the register state between calls. glibc and the application were interchangeably using SSE/AVX which ruined performance 4x+… https://t.co/SlWRgEHtMF”

- [WayBack] Daniel Lemire on Twitter: “… this applies only to older Intel CPUs. The description is somewhat misleading.… “

- [WayBack] Daniel Lemire on Twitter: “AMD processors and Intel processors from Skylake and up are unaffected. It is definitively not the case that it affects all AVX-capable processors. And I would argue that the code was poorly crafted to begin with. Have you looked at it?… https://t.co/GMXCYDSjMG”

- [WayBack] Trent Lloyd 🦆 on Twitter: “Yeah I left that detail out on account of trying to being short but that was probably a disservice. It is quite relevant that I t’s CPU specific and part of why it can be hard to reproduce the issue for some in that specific case.… https://t.co/N1w9FPNeKQ”

Kelly raised a bunch of interesting questions and remarks because of the above:

- [WayBack] Kelly Sommers on Twitter: “If thermal heat is a huge issue with many core processors and turbo boost clock speeds why don’t we just have smaller processors with multiple processors scattered across the motherboard with individual cooling? It makes no sense to turbo boost 1 of 10 cores.”

- [WayBack] Kelly Sommers on Twitter: “Here’s what I consider a real problem about multi-tenant or multi-workload containerization like Kubernetes Let’s say you deploy a bunch of containers to some nodes. I deploy a HTTP server that is highly optimized with AVX and all the other cores down clock to prevent melting.”

- [WayBack] Kelly Sommers on Twitter: “You get paged at 3am because I just ripped 1ghz multiplied by 11 and stole 11ghz worth of performance from the rest of the system.”

- [WayBack] Kelly Sommers on Twitter: “So here’s a real question. What does Amazon and Microsoft and other kubernetes cloud services do to prevent your containers from losing 11ghz of performance because someone deployed some AVX optimized algorithm on the same host?”

- [WayBack] Kelly Sommers on Twitter: “How do and how will containerized orchestration systems like Kubernetes enforce isolation from the tricks Intel CPU’s do to prevent from melting when highly optimized code is running in a tight loop?”

- [WayBack] Kelly Sommers on Twitter: “I could just start deploying really small containers all over Amazon and Azure requesting very cheap 1 milli CPU resources in Kubernetes and have a tight AVX loop and trip the Intel thermal kick stand and make all that hosts and your containers drop significantly in performance.”

- [WayBack] Kelly Sommers on Twitter: “If you have a Kubernetes cluster or you are a cloud provider of Kubernetes like Amazon or Azure and have turbo boost enabled or SSE/AVX enabled it seems to me you’re vulnerable to attacks that cripple all CPU cores on the system.”

- [WayBack] Kelly Sommers on Twitter: “Feels to me CPU’s were designed for single threaded performance and then we slapped 8, 10, 12, 16 of them together as cores into a single package and it started to get too hot so now the competing designs fight against each other. It’s no longer a wholistic thought out design.”

- [WayBack] Kelly Sommers on Twitter: “Noisey neighbours has always been a problem, mostly due to CPU cache and memory bandwidth sharing but more than ever CPU’s are designed in such a way it’s easy to exploit it’s thermal protections in a heavy handed way that affects all cores very significantly.”

- [WayBack] Kelly Sommers on Twitter: “Another similar attack would be code designed to cause CPU instruction prediction pipelines to invalidate constantly affecting all code run on a CPU core. This is a much harder attack than the AVX one. You could in theory continuously flush the deep instruction pipeline.”

I collected the above links because of [WayBack] GitHub – maximmasiutin/FastMM4-AVX: FastMM4 fork with AVX support and multi-threaded enhancements (faster locking), where it is unclear which parts of the gains are because of AVX and which parts are because of other optimizations. It looks like under heavy loads in data-center-like conditions the total gain is about 30%. The loss for traditional processing has not been measured there, but based on the above my estimate is that it is at least 20%.

Full tweets below.

Posted in Assembly Language, Development, Software Development, x64, x86 | Leave a Comment »

performance – Why is this C++ code faster than my hand-written assembly for testing the Collatz conjecture? – Stack Overflow

Posted by jpluimers on 2019/02/28

Geek pr0n at [WayBack] performance – Why is this C++ code faster than my hand-written assembly for testing the Collatz conjecture? – Stack Overflow

Via: [WayBack] Very nice #Geekpr0n “Why is C++ faster than my hand-written assembly code?” The comments are of high quality i… – Jan Wildeboer – Google+

–jeroen

Posted in Assembly Language, C, C++, Development, Software Development, x64, x86 | Leave a Comment »

A reference to 6502 by “Remember that in a stack trace, the addresses are return addresses, not call addresses – The Old New Thing”

Posted by jpluimers on 2018/09/11

On x86/x64/ARM/…:

It’s where the function is going to return to, not where it came from.

And:

Bonus chatter: This reminds me of a quirk of the 6502 processor: When it pushed the return address onto the stack, it actually pushed the return address minus one. This is an artifact of the way the 6502 is implemented, but it results in the nice feature that the stack trace gives you the line number of the call instruction.

Of course, this is all hypothetical, because 6502 debuggers didn’t have fancy features like stack traces or line numbers.

Source: [WayBack] Remember that in a stack trace, the addresses are return addresses, not call addresses – The Old New Thing

Which resulted in these comments at [WayBack] CC +mos6502 – Jeroen Wiert Pluimers – Google+:

- mos6502: And don’t forget the crucial difference in PC on 6502 between RTS and RTI!

- Jeroen Wiert Pluimers: +mos6502 I totally forgot about that one. Thanks for reminding me

<<Note that unlike RTS, the return address on the stack is the actual address rather than the address-1.>>

References:

[WayBack] 6502.org: Tutorials and Aids – RTI

RTI retrieves the Processor Status Word (flags) and the Program Counter from the stack in that order (interrupts push the PC first and then the PSW).

Note that unlike RTS, the return address on the stack is the actual address rather than the address-1.

[WayBack] 6502.org: Tutorials and Aids – RTS

RTS pulls the top two bytes off the stack (low byte first) and transfers program control to that address+1. It is used, as expected, to exit a subroutine invoked via JSR which pushed the address-1.

RTS is frequently used to implement a jump table where addresses-1 are pushed onto the stack and accessed via RTS eg. to access the second of four routines.
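The address-1 behaviour is easy to see in a toy model. The sketch below is not an emulator: the `Mini6502` class and its method names are invented for this illustration, and it models only the stack, the program counter, JSR, RTS and the jump table trick quoted above.

```python
class Mini6502:
    """Toy model of the 6502 return address handling (illustration only)."""

    def __init__(self) -> None:
        self.memory = bytearray(0x10000)
        self.sp = 0xFF   # stack pointer, the stack lives in page $01
        self.pc = 0      # address of the instruction being executed

    def push(self, value: int) -> None:
        self.memory[0x0100 + self.sp] = value & 0xFF
        self.sp = (self.sp - 1) & 0xFF

    def pull(self) -> int:
        self.sp = (self.sp + 1) & 0xFF
        return self.memory[0x0100 + self.sp]

    def jsr(self, target: int) -> None:
        # JSR is 3 bytes long; it pushes the address of its own last byte,
        # which is the return address minus one (high byte first).
        pushed = (self.pc + 2) & 0xFFFF
        self.push(pushed >> 8)
        self.push(pushed & 0xFF)
        self.pc = target

    def rts(self) -> None:
        # RTS pulls the low byte first and resumes at the pulled address + 1.
        low = self.pull()
        high = self.pull()
        self.pc = (((high << 8) | low) + 1) & 0xFFFF

    def jump_via_rts(self, target: int) -> None:
        # Jump table trick: push target - 1, then let RTS add the 1 back.
        pushed = (target - 1) & 0xFFFF
        self.push(pushed >> 8)
        self.push(pushed & 0xFF)
        self.rts()


cpu = Mini6502()
cpu.pc = 0x0600                  # pretend a JSR $1234 sits at $0600..$0602
cpu.jsr(0x1234)
on_stack = cpu.memory[0x01FE] | (cpu.memory[0x01FF] << 8)
print(f"on stack: ${on_stack:04X}")      # still inside the JSR instruction
cpu.rts()
print(f"resumes at: ${cpu.pc:04X}")      # the instruction after the JSR
```

Because the value on the stack still points inside the JSR instruction, a hypothetical 6502 stack trace would indeed land on the calling line rather than on the line after it.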

–jeroen

Posted in 6502, 6502 Assembly, Assembly Language, Development, History, Software Development, The Old New Thing, Windows Development, x64, x86 | Leave a Comment »

How to check if a binary is 32 or 64 bit on Windows? – Super User

Posted by jpluimers on 2018/08/17

It seems there are a few ways to check, but only loading the binary is a sure way to know what the process will be using: [WayBack] How to check if a binary is 32 or 64 bit on Windows? – Super User and [WayBack] How do I determine if a .NET application is 32 or 64 bit? – Stack Overflow.

Details in the answers of these questions, here are a few highlights:

- The first few characters in the binary header reveal what it was originally designed for.

- A .NET executable might still have an x64 header for bootstrapping.

- The Windows SDK has a tool `dumpbin.exe` with the `/headers` option.

- You can use `sigcheck.exe` from SysInternals.

- The `file` utility (e.g. from cygwin, which comes with msysgit) will distinguish between 32- and 64-bit executables.

- Use the command line `7z.exe` on the PE file (Exe or DLL) in question which gives you a `CPU` line.

- Virustotal `File detail` is a way to find out if a binary is 32 bit or 64 bit.

- Even an executable marked as 32-bit can run as 64-bit if, for example, it’s a .NET executable that can run as 32- or 64-bit. For more information see https://stackoverflow.com/questions/3782191/how-do-i-determine-if-a-net-application-is-32-or-64-bit, which has an answer that says that the `CORFLAGS` utility can be used to determine how a .NET application will run.
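The header check from the first highlight can be sketched in a few lines of Python. This is a minimal illustration, not one of the tools mentioned above: the function names `pe_machine` and `fake_pe` are made up, and `fake_pe` builds a synthetic header purely so the example runs anywhere. As noted in the last highlight, the header of a .NET executable does not tell the whole story.

```python
import struct

# Machine values from the PE/COFF file header.
MACHINE_NAMES = {
    0x014C: "x86 (32-bit)",
    0x8664: "x64 (64-bit)",
    0xAA64: "ARM64 (64-bit)",
}


def pe_machine(data: bytes) -> str:
    """Return the target machine named in a PE header (illustrative helper)."""
    if data[:2] != b"MZ":
        raise ValueError("not an MZ executable")
    # Offset 0x3C of the DOS header holds the file offset of the PE signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("no PE signature found")
    # The 2-byte Machine field follows the 4-byte signature.
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return MACHINE_NAMES.get(machine, f"unknown (0x{machine:04X})")


def fake_pe(machine: int) -> bytes:
    """Build a tiny synthetic header for the demo; not a runnable binary."""
    header = bytearray(0x80)
    header[0:2] = b"MZ"
    struct.pack_into("<I", header, 0x3C, 0x40)
    header[0x40:0x44] = b"PE\0\0"
    struct.pack_into("<H", header, 0x44, machine)
    return bytes(header)


print(pe_machine(fake_pe(0x014C)))
print(pe_machine(fake_pe(0x8664)))
```

For a real file you would pass its contents instead, for instance `pe_machine(open(path, "rb").read())` with `path` pointing at the Exe or DLL in question.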

–jeroen

Search terms: win64, win32, x64, x86_64, x86

Posted in Assembly Language, Development, Power User, Windows, x64, x86 | Leave a Comment »

Grammar Zoo – Browsable Borland Delphi Assembler Grammar

Posted by jpluimers on 2018/06/12

Interesting: [WayBack] Grammar Zoo – Browsable Borland Delphi Assembler Grammar.

It is very complete, including constructs like the [WayBack] special directives VMTOFFSET and DMTINDEX for Delphi virtual and dynamic methods.

You can contribute to it using https://github.com/slebok/zoo/tree/master/zoo/assembly/delphi

–jeroen

Posted in Assembly Language, Delphi, Development, Software Development, x86 | Leave a Comment »

Mixing x64 Outlook with x86 Delphi: never a good idea…

Posted by jpluimers on 2018/02/07

Via [WayBack] Hi all,I’m a bit stuck here with a “simple” task.Looks like Outlook 2016 doesn’t supports “MAPISendMail”, at least, if i trigger this, Thunderbird… – Attila Kovacs – Google+:

Basically only MAPISendMail works cross architecture and only if you fill all fields.

This edited [WayBack] email – MAPI Windows 7 64 bit – Stack Overflow answer by [WayBack] epotter is very insightful (thanks [WayBack] Rik van Kekem – Google+):

Calls to MAPISendMail should work without a problem.

For all other MAPI method and function calls to work in a MAPI application, the bitness (32 or 64) of the MAPI application must be the same as the bitness of the MAPI subsystem on the computer that the application is targeted to run on.

In general, a 32-bit MAPI application must not run on a 64-bit platform (64-bit Outlook on 64-bit Windows) without first being rebuilt as a 64-bit application.

For a more detailed explanation, see the MSDN page on Building MAPI Applications on 32-Bit and 64-Bit Platforms

–jeroen

Posted in Delphi, Delphi x64, Development, Office, Outlook, Power User, Software Development, x86 | Leave a Comment »